Microsoft Unveils Maia 200 AI Accelerators To Boost Cloud AI Independence

Despite CEO Satya Nadella already having "a bunch of chips sitting in inventory" due to a shortage of power, Microsoft just announced its own next-gen AI silicon: the Maia 200 accelerator, built to run large models in the cloud faster and cheaper than what's in Azure today. It's part of a broader push to reduce dependence on third-party AI chips (like Nvidia's) by doing more of the hardware design in-house.

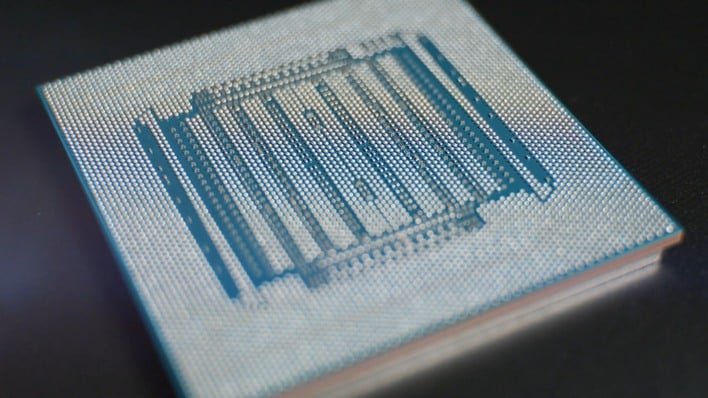

At its core, Maia 200 is an AI chip from Microsoft's own silicon team, engineered specifically for running inference on large language models and other generative AI workloads. Unlike the first Maia (Maia 100), which was more of an early foray, Microsoft is deliberately comparing this one against the competition right up front.

Maia 200 pushes some impressive numbers for inference workloads ...

Copyright of this story solely belongs to hothardware.com.