HBF will integrate alongside HBM near AI accelerators, forming a tiered memory architecture.

The explosion of AI workloads has placed unprecedented pressure on memory systems, forcing companies to rethink how they deliver data to accelerators.

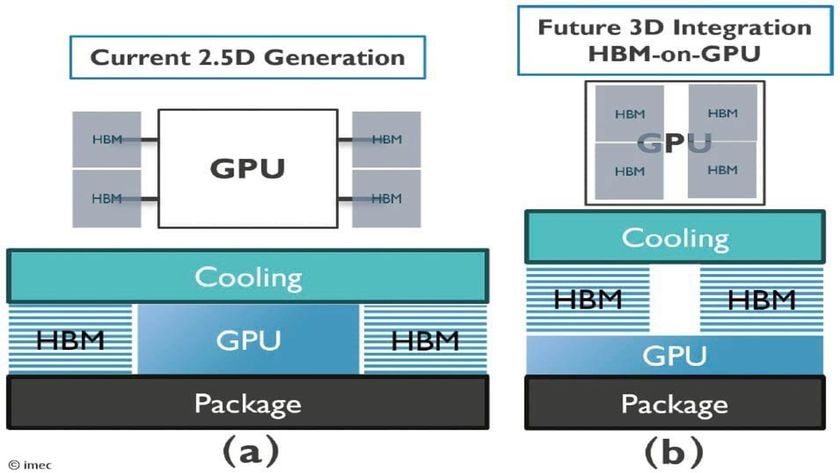

High-bandwidth memory (HBM) has served as fast memory placed close to the GPU, allowing AI models to read and process key-value (KV) cache data efficiently.

HBM, however, is fast but expensive and limited in capacity, while high-bandwidth flash (HBF) offers much larger capacity at slower speeds.
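To make the tiering trade-off concrete, here is a minimal Python sketch of a two-tier key-value block store. It assumes a simple least-recently-used (LRU) policy: recently used blocks stay in a small fast tier standing in for HBM, and older blocks spill to a larger, slower tier standing in for HBF. The class name `TieredKVStore`, the block-count capacities, and the promote/demote policy are hypothetical illustrations, not how any vendor's HBM or HBF hardware or software actually manages data.

```python
from __future__ import annotations

from collections import OrderedDict


class TieredKVStore:
    """Hypothetical two-tier store: a small fast tier (standing in for HBM)
    backed by a larger slow tier (standing in for HBF). Capacities are counted
    in blocks and the LRU policy is an illustrative assumption."""

    def __init__(self, fast_capacity: int, slow_capacity: int) -> None:
        self.fast_capacity = fast_capacity
        self.slow_capacity = slow_capacity
        # Each OrderedDict keeps LRU order: least recently used first.
        self.fast: OrderedDict[str, bytes] = OrderedDict()
        self.slow: OrderedDict[str, bytes] = OrderedDict()

    def put(self, key: str, block: bytes) -> None:
        # New or updated blocks always land in the fast tier.
        self.slow.pop(key, None)
        self.fast[key] = block
        self.fast.move_to_end(key)
        self._spill()

    def get(self, key: str) -> tuple[bytes, str]:
        """Return the block and the tier it was found in ("fast" or "slow")."""
        if key in self.fast:
            self.fast.move_to_end(key)  # refresh recency
            return self.fast[key], "fast"
        if key in self.slow:
            # Promote on access: move the block back into the fast tier.
            block = self.slow.pop(key)
            self.fast[key] = block
            self._spill()
            return block, "slow"
        raise KeyError(key)

    def _spill(self) -> None:
        # Demote least recently used blocks from the fast tier to the slow tier.
        while len(self.fast) > self.fast_capacity:
            old_key, old_block = self.fast.popitem(last=False)
            self.slow[old_key] = old_block
        # If the slow tier also overflows, drop its oldest blocks.
        while len(self.slow) > self.slow_capacity:
            self.slow.popitem(last=False)


# Usage: with room for only two blocks in the fast tier, the third insert
# demotes the least recently used block to the slow tier.
store = TieredKVStore(fast_capacity=2, slow_capacity=8)
for i in range(3):
    store.put(f"block{i}", bytes(16))
_, tier = store.get("block0")  # found in the slow tier, then promoted back
```

LRU is only the simplest policy that shows the small-and-fast versus large-and-slow split; a real tiered design would also have to account for transfer latency and bandwidth between the tiers.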

Copyright of this story solely belongs to techradar.com.