Qualcomm Launches AI250 & AI200 With Huge Memory Footprint For AI Data Center Workloads

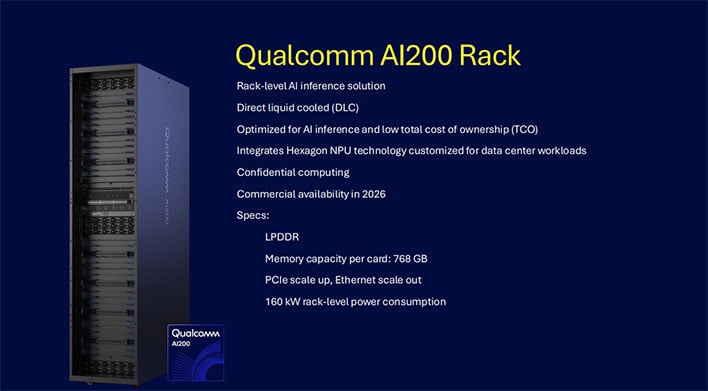

Major technology firms are betting big on AI, creating huge demand for hardware, software, and services that underpin this massive, burgeoning market. That includes Qualcomm, which is rolling out new AI200 and AI250 chip-based accelerator cards. These are Qualcomm's next-generation AI inference-optimized solutions for data centers, and they bring with them support for gobs of memory.

This is key because AI has an insatiable appetite for memory (and storage), not just NPU horsepower. From Qualcomm's vantage point, the AI200 and AI250 deliver rack-scale performance and superior memory capacity at an industry-leading total cost of ownership (TCO), with optimized performance for large language models (LLMs) and large multimodal models (LMMs).

What that boils down to for the AI200 is support for up to 768GB of LPDDR per card to enable exceptional scale and flexibility for AI inference. Qualcomm's AI200 rack also integrates a Hexagon NPU and, as a ...
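To put the 768GB per-card figure in perspective, here is a rough back-of-the-envelope sketch in Python. It estimates only the memory needed to hold a model's weights, and it ignores the KV cache, activations, and runtime overhead, so real requirements would be higher. The model sizes and precisions used below are hypothetical examples, not figures from Qualcomm, and the function names are made up for illustration.

```python
CARD_CAPACITY_GB = 768  # per-card LPDDR capacity quoted for the AI200


def weights_gb(params_billions, bytes_per_param):
    """Approximate weight memory in decimal GB.

    1 billion parameters at 1 byte each is roughly 1 GB, so the
    estimate is simply parameters (in billions) times bytes per parameter.
    """
    return params_billions * bytes_per_param


def fits_on_card(params_billions, bytes_per_param, capacity_gb=CARD_CAPACITY_GB):
    """Return True if the weights alone fit in the given capacity."""
    return weights_gb(params_billions, bytes_per_param) <= capacity_gb


if __name__ == "__main__":
    # Hypothetical model sizes (billions of parameters) and precisions.
    examples = [(70, 2), (405, 2), (405, 1)]
    for params, nbytes in examples:
        need = weights_gb(params, nbytes)
        verdict = "fits" if fits_on_card(params, nbytes) else "does not fit"
        print(f"{params}B params at {nbytes} byte(s)/param: ~{need} GB, {verdict}")
```

Under these assumptions, a hypothetical 405-billion-parameter model stored at 2 bytes per parameter would need about 810 GB for weights alone and would not fit on a single card, while the same model quantized to 1 byte per parameter would need about 405 GB and would.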

Copyright of this story solely belongs to hothardware.com.