Meta’s AI chatbot guidelines leak raises questions about child safety

techradar.com

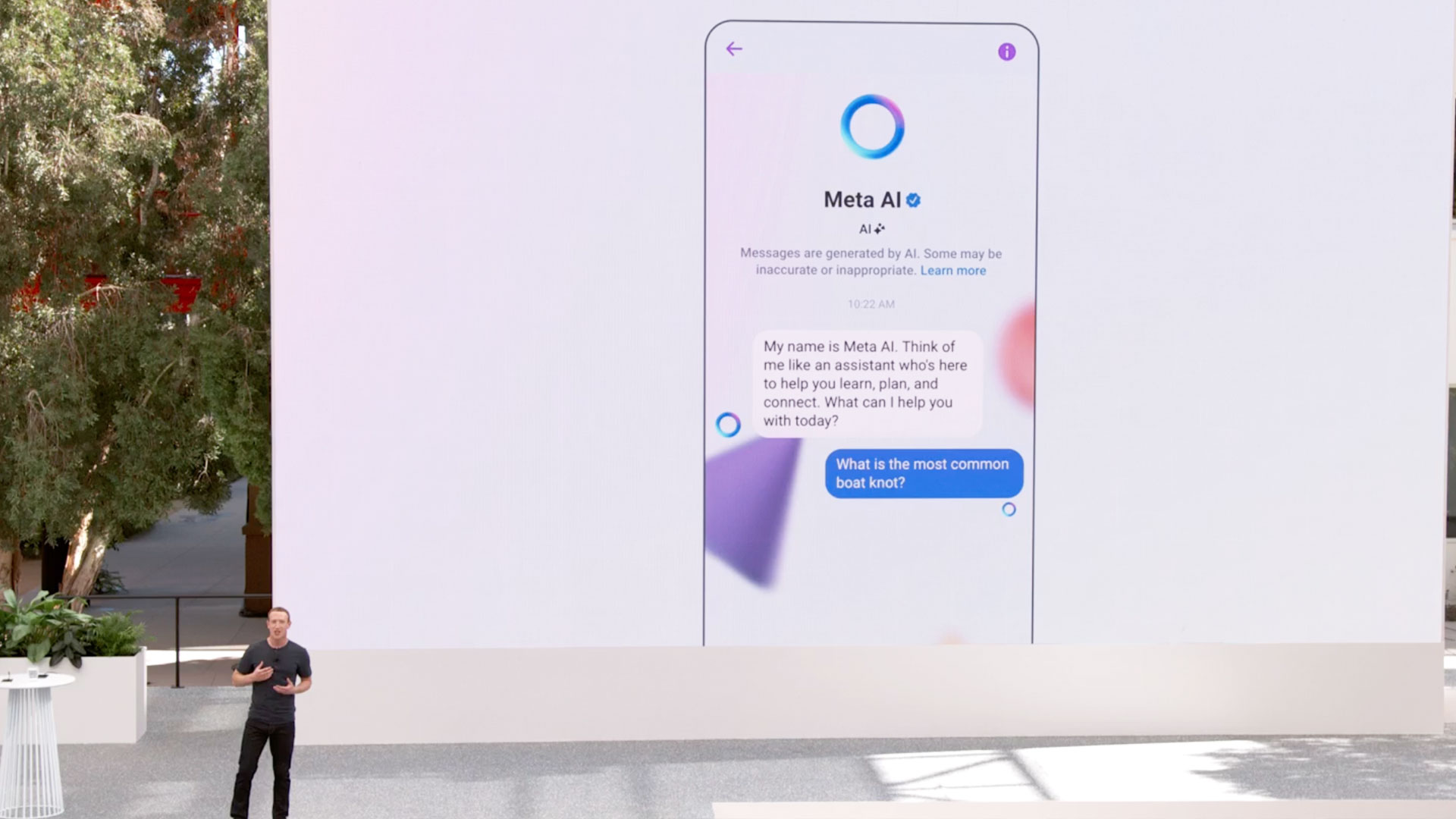

- A leaked Meta document revealed that the company’s AI chatbot guidelines once permitted inappropriate responses

- Meta confirmed the document’s authenticity and has since removed some of the most troubling sections

- Calls for investigations also raise the question of how successful AI moderation can be

Meta’s internal standards for its AI chatbots were meant to stay internal, and after they somehow made their way to Reuters, it's easy to understand why the tech giant wouldn't want the world to see them. Meta grappled with the complexities of AI ethics, children's online safety, and content standards, and arrived at what few would call a successful roadmap for AI chatbot rules.

Easily the most disturbing details shared by Reuters concern how the chatbot talks to children. As reported by Reuters, the document states that it's "acceptable [for the AI ...

Copyright of this story solely belongs to techradar.com.