AI GPU accelerators with 6TB HBM memory could appear by 2035 as AI GPU die sizes are set to shrink - but there's far worse coming up

techradar.com

- Future AI memory chips could demand more power than entire industrial zones combined

- 6TB of memory in one GPU sounds amazing until you see the power draw

- HBM8 stacks are impressive in theory, but terrifying in practice for any energy-conscious enterprise

The relentless drive to expand AI processing power is ushering in a new era for memory technology, but it comes at a cost that raises practical and environmental concerns, experts have warned.

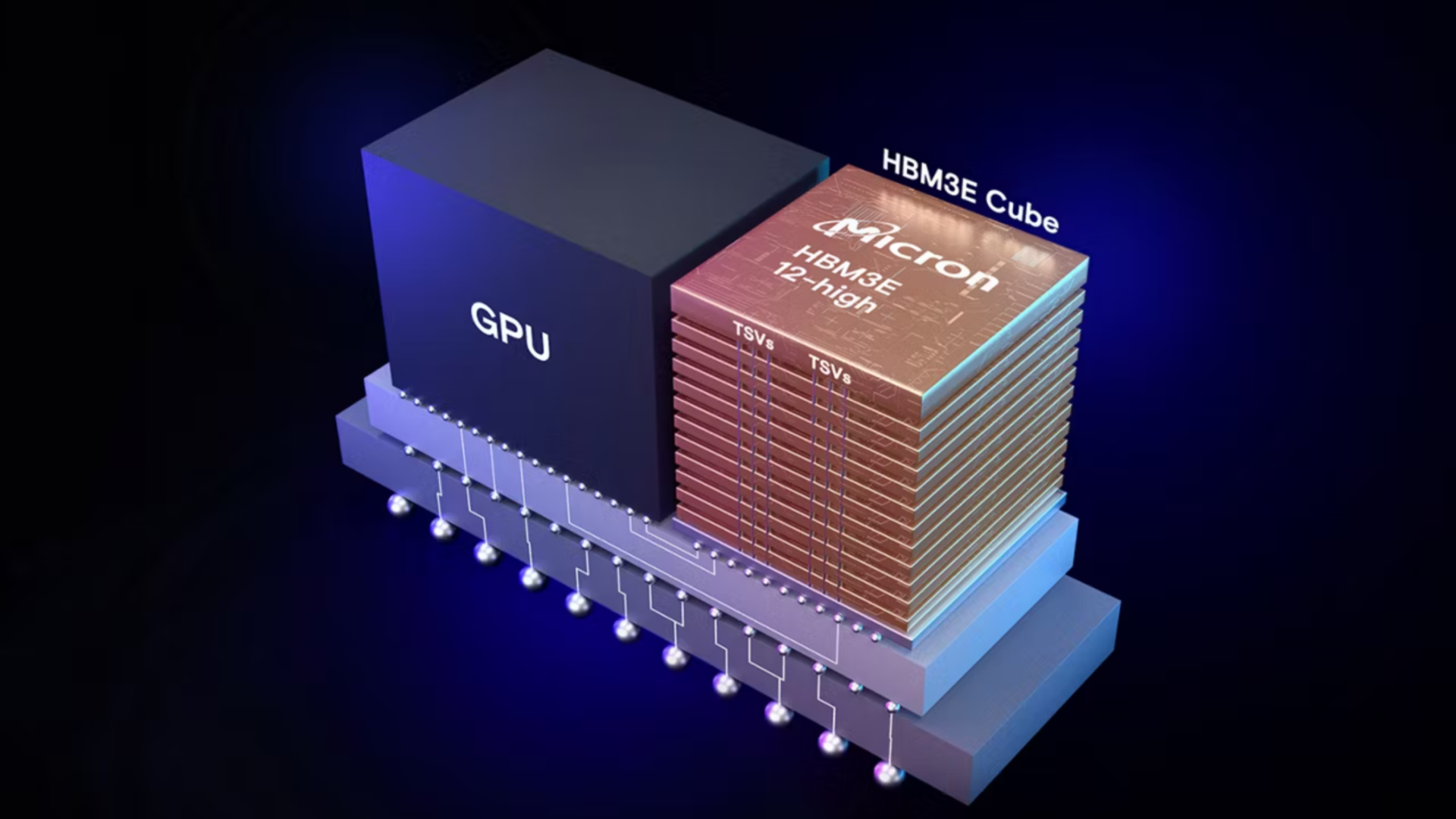

Research by the Korea Advanced Institute of Science & Technology (KAIST) and the Terabyte Interconnection and Package Laboratory (TERA) suggests that by 2035, AI GPU accelerators equipped with 6TB of HBM could become a reality.

These developments, while technically impressive, also highlight the steep power demands and increasing complexity involved in pushing the boundaries of AI infrastructure.
